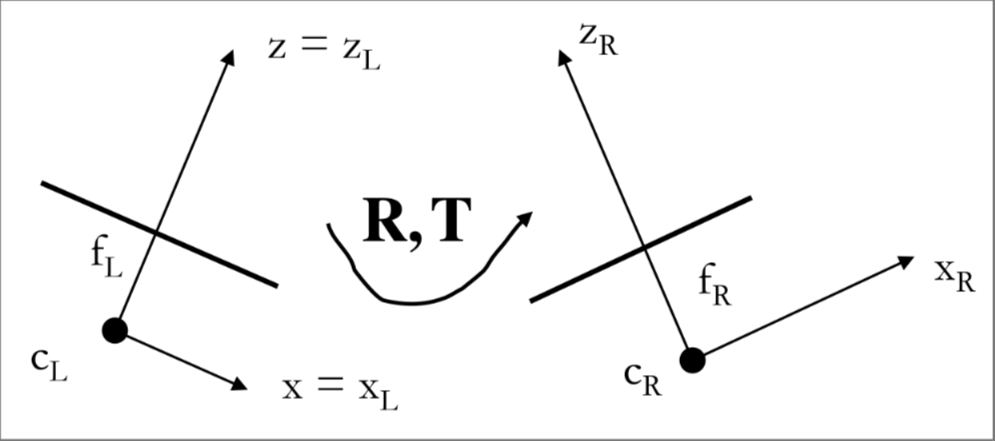

STEREO CAMERA CALIBRATION

WHY NOT CALIBRATE BOTH CAMERAS WITH ZHANG

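To calibrate a stereo camera system, the intrinsic parameters of each camera alone are not sufficient: the rigid motion between the two cameras, i.e. the rotation $R$ and translation $T$ such that $M_R = R\,M_L + T$, is also needed. A first approach could be to apply Zhang's method to both cameras separately and derive the rigid motion from their extrinsic parameters, but this approach has one major flaw: it is not robust to noise.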

BETTER SOLUTION: INITIAL GUESS AND REFINEMENT

A more robust solution is to make an initial guess of $R$ and $T$ by having both cameras take pictures of the same planar pattern in the same pose, repeated for several poses, and then to refine the guess by a non-linear minimization of the reprojection error.

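The initial guess is obtained as the median of the $(R_i, T_i)$ computed, for each pattern pose $i$, by chaining the extrinsic parameters $(R_{L,i}, T_{L,i})$ and $(R_{R,i}, T_{R,i})$ estimated for the two cameras:

$$R_i = R_{R,i}\,R_{L,i}^{T}, \qquad T_i = T_{R,i} - R_i\,T_{L,i}$$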
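The chaining and median steps can be sketched in Python as below. This is a minimal sketch under stated assumptions: the per-view extrinsics (with $X_{cam} = R\,X_{pattern} + T$) are assumed to be already estimated, e.g. by Zhang's method; matrices are plain lists of rows; and since the notes do not say how a median of rotations is taken, here it is taken component-wise on rotation vectors (an assumption). All helper names are hypothetical.

```python
import math
from statistics import median


def matmul(A, B):
    """Matrix product; matrices are lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def transpose(A):
    return [list(row) for row in zip(*A)]


def matvec(A, x):
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def rot_to_vec(R):
    """Rotation matrix -> rotation vector (axis * angle), for angles in [0, pi)."""
    cos_a = max(-1.0, min(1.0, (R[0][0] + R[1][1] + R[2][2] - 1.0) / 2.0))
    angle = math.acos(cos_a)
    if angle < 1e-12:
        return [0.0, 0.0, 0.0]
    s = 2.0 * math.sin(angle)
    axis = [(R[2][1] - R[1][2]) / s, (R[0][2] - R[2][0]) / s, (R[1][0] - R[0][1]) / s]
    return [angle * a for a in axis]


def vec_to_rot(r):
    """Rotation vector -> rotation matrix (Rodrigues' formula)."""
    angle = math.sqrt(sum(comp * comp for comp in r))
    if angle < 1e-12:
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    kx, ky, kz = (comp / angle for comp in r)
    c, s, v = math.cos(angle), math.sin(angle), 1.0 - math.cos(angle)
    return [[c + kx * kx * v, kx * ky * v - kz * s, kx * kz * v + ky * s],
            [ky * kx * v + kz * s, c + ky * ky * v, ky * kz * v - kx * s],
            [kz * kx * v - ky * s, kz * ky * v + kx * s, c + kz * kz * v]]


def initial_guess(left_extrinsics, right_extrinsics):
    """Median of the (R_i, T_i) obtained by chaining per-view extrinsics.

    Each list holds one (R, T) pair per pattern pose, same order in both lists.
    """
    rot_vecs, translations = [], []
    for (R_L, T_L), (R_R, T_R) in zip(left_extrinsics, right_extrinsics):
        R_i = matmul(R_R, transpose(R_L))                       # R_i = R_R R_L^T
        T_i = [tr - x for tr, x in zip(T_R, matvec(R_i, T_L))]  # T_i = T_R - R_i T_L
        rot_vecs.append(rot_to_vec(R_i))
        translations.append(T_i)
    # Component-wise median; rotations are compared through their rotation vectors.
    r_med = [median(r[k] for r in rot_vecs) for k in range(3)]
    T_med = [median(t[k] for t in translations) for k in range(3)]
    return vec_to_rot(r_med), T_med
```

With noise-free synthetic data every $(R_i, T_i)$ equals the true rigid motion, so the median returns it unchanged; with real data the median is less sensitive to bad views than a mean, and its result is only the starting point for the refinement.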

The guess is then refined with the Levenberg-Marquardt algorithm.
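One common way to write the cost minimized by Levenberg-Marquardt, given here as an assumption since the notes do not spell it out, sums the reprojection errors of all pattern points $w_j$ over all poses $i$ in both images, with the pose of the right camera expressed through the left one and the rigid motion. $\pi(A, X)$ denotes the pinhole projection of a point $X$ with intrinsics $A$, $m_{L,ij}$ and $m_{R,ij}$ are the measured image points, and lens distortion is omitted for brevity:

$$\min_{R,\,T,\,\{R_{L,i},\,T_{L,i}\}} \sum_{i}\sum_{j} \left\lVert m_{L,ij} - \pi\!\left(A_L,\; R_{L,i} w_j + T_{L,i}\right) \right\rVert^2 + \left\lVert m_{R,ij} - \pi\!\left(A_R,\; R\,(R_{L,i} w_j + T_{L,i}) + T\right) \right\rVert^2$$

In this form the intrinsics are held fixed; they could also be refined jointly.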

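Then, for convenience, one of the two camera reference frames (CRF), typically the left one, is chosen as the stereo reference frame (SRF); the projection matrix of the other camera is obtained from the rigid motion between the two cameras:

$$P_L = A_L \left[\, I \mid 0 \,\right], \qquad P_R = A_R \left[\, R \mid T \,\right]$$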
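A small Python sketch of the two projection matrices, assuming matrices are stored as lists of rows and points are given in the SRF (the left CRF); `projection_matrices` and `project` are hypothetical helper names, and the numbers describe a made-up rig:

```python
def matmul(A, B):
    """Matrix product; matrices are lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def matvec(A, x):
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def projection_matrices(A_L, A_R, R, T):
    """P_L = A_L [I | 0] and P_R = A_R [R | T], with the SRF on the left camera."""
    I0 = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0]]
    RT = [list(R[i]) + [T[i]] for i in range(3)]
    return matmul(A_L, I0), matmul(A_R, RT)


def project(P, M):
    """Project a 3D point M (in the SRF) to pixel coordinates (u, v)."""
    x = matvec(P, list(M) + [1.0])
    return x[0] / x[2], x[1] / x[2]


A = [[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]]
R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
T = [-0.1, 0.0, 0.0]  # right camera 0.1 units to the right of the left one
P_L, P_R = projection_matrices(A, A, R, T)
print(project(P_L, (0.1, 0.05, 2.0)))  # (360.0, 260.0)
print(project(P_R, (0.1, 0.05, 2.0)))  # (320.0, 260.0)
```

The same point lands on the same row in both images here only because this toy rig is already aligned ($R = I$, $T$ along $x$); in general it does not, which is what rectification fixes.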

RECTIFICATION

To make the search for corresponding points easier, the images need to be perfectly aligned, i.e. corresponding points must lie on the same image row (horizontal epipolar lines). This cannot be achieved by mechanical alignment, so the images are rectified: the calibrated cameras are virtually rotated about their optical centers (i.e. their CRFs are redefined) through a homography.

CONSTRUCTING THE NEW INTRINSIC MATRIX

To define the new cameras, a new intrinsic matrix $A_{new}$, shared by both, is chosen arbitrarily (e.g. the mean of $A_L$ and $A_R$):

$$A_{new} = \frac{A_L + A_R}{2}$$

CONSTRUCTING THE NEW ROTATION MATRIX

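Then a new rotation matrix $R_{new}$ has to be defined, whose rows $r_1, r_2, r_3$ are the new $x$, $y$, $z$ axes expressed in the SRF. The new $x$ axis is chosen parallel to the baseline, i.e. the vector joining the two optical centers, which in the stereo reference frame (where $C_L = 0$) becomes:

$$\mathbf{b} = C_R - C_L = -R^{T} T$$

So the first vector $r_1$ is taken parallel to $\mathbf{b}$ as:

$$r_1 = \frac{\mathbf{b}}{\lVert \mathbf{b} \rVert}$$

The vector $r_2$ is taken orthogonal to $r_1$ and to an arbitrary unit vector $k$, which can be the old $z$ axis:

$$r_2 = \frac{k \times r_1}{\lVert k \times r_1 \rVert}$$

In the end, the new $z$ axis $r_3$ is perpendicular to the other two, so:

$$r_3 = r_1 \times r_2$$

So the new rotation matrix becomes:

$$R_{new} = \begin{bmatrix} r_1^{T} \\ r_2^{T} \\ r_3^{T} \end{bmatrix}$$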
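The construction of $A_{new}$ and $R_{new}$ can be sketched in Python as follows. This is a minimal sketch, assuming the SRF is the left camera, matrices are lists of rows, and $k$ defaults to the old $z$ axis; `new_intrinsics` and `rectifying_rotation` are hypothetical names.

```python
import math


def matvec(A, x):
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def transpose(A):
    return [list(row) for row in zip(*A)]


def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return [c / n for c in v]


def new_intrinsics(A_L, A_R):
    """A_new chosen as the element-wise mean of the two intrinsic matrices."""
    return [[(a + b) / 2.0 for a, b in zip(row_l, row_r)] for row_l, row_r in zip(A_L, A_R)]


def rectifying_rotation(R, T, k=(0.0, 0.0, 1.0)):
    """Rows r1, r2, r3 of R_new, expressed in the SRF (left camera frame).

    k is the arbitrary helper vector (default: the old z axis); it must not be
    parallel to the baseline, otherwise k x r1 vanishes.
    """
    b = [-c for c in matvec(transpose(R), T)]  # baseline C_R - C_L = -R^T T
    r1 = normalize(b)                          # new x axis along the baseline
    r2 = normalize(cross(list(k), r1))         # orthogonal to k and to r1
    r3 = cross(r1, r2)                         # completes a right-handed frame
    return [r1, r2, r3]
```

For a rig that is already aligned ($R = I$, $T = (-b, 0, 0)$) this returns the identity, as expected: no virtual rotation is needed.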

RECTIFICATION HOMOGRAPHIES

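Both images undergo a rotation and a change of intrinsic parameters, so the rectified images are related to the original ones through homographies.

So for the left camera (whose CRF is the SRF), a point $M$ projects as $\tilde m_L \simeq A_L M$ in the original image and as $\tilde m'_L \simeq A_{new} R_{new} M$ in the rectified one, hence:

$$\tilde m'_L \simeq A_{new} R_{new} A_L^{-1}\, \tilde m_L = H_L\, \tilde m_L$$

For the right image it is convenient to move the origin of the WRF into the optical center of the right camera, $M' = M - C_R$, so that $R M + T = R M'$. Then $\tilde m_R \simeq A_R R M'$ and $\tilde m'_R \simeq A_{new} R_{new} M'$, hence:

$$\tilde m'_R \simeq A_{new} R_{new} R^{T} A_R^{-1}\, \tilde m_R = H_R\, \tilde m_R$$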
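The two homographies can be checked numerically with the sketch below. It assumes the SRF is the left camera, $A_{new}$ is the mean of the two intrinsic matrices and $k$ is the old $z$ axis; the rig parameters are invented for the example, and all function names are hypothetical.

```python
import math


def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def transpose(A):
    return [list(row) for row in zip(*A)]


def matvec(A, x):
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def inv3(m):
    """Inverse of a 3x3 matrix via the adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    adj = [[e * i - f * h, c * h - b * i, b * f - c * e],
           [f * g - d * i, a * i - c * g, c * d - a * f],
           [d * h - e * g, b * g - a * h, a * e - b * d]]
    return [[x / det for x in row] for row in adj]


def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0]]


def normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return [c / n for c in v]


def rectification_homographies(A_L, A_R, R, T, k=(0.0, 0.0, 1.0)):
    """H_L = A_new R_new A_L^-1 and H_R = A_new R_new R^T A_R^-1 (SRF = left camera)."""
    A_new = [[(p + q) / 2.0 for p, q in zip(rl, rr)] for rl, rr in zip(A_L, A_R)]
    r1 = normalize([-c for c in matvec(transpose(R), T)])  # along -R^T T
    r2 = normalize(cross(list(k), r1))
    R_new = [r1, r2, cross(r1, r2)]
    H_L = matmul(matmul(A_new, R_new), inv3(A_L))
    H_R = matmul(matmul(matmul(A_new, R_new), transpose(R)), inv3(A_R))
    return H_L, H_R


def apply_h(H, u, v):
    """Map pixel (u, v) through homography H (homogeneous coordinates)."""
    x = matvec(H, [u, v, 1.0])
    return x[0] / x[2], x[1] / x[2]


a = 0.05  # small rotation about y between the two cameras
R = [[math.cos(a), 0.0, math.sin(a)], [0.0, 1.0, 0.0], [-math.sin(a), 0.0, math.cos(a)]]
T = [-0.1, 0.002, 0.001]
A_L = [[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]]
A_R = [[810.0, 0.0, 330.0], [0.0, 805.0, 235.0], [0.0, 0.0, 1.0]]
H_L, H_R = rectification_homographies(A_L, A_R, R, T)

M = [0.2, -0.1, 2.5]  # a point in the SRF
m_L = matvec(A_L, M)
m_R = matvec(A_R, [p + t for p, t in zip(matvec(R, M), T)])
uL, vL = apply_h(H_L, m_L[0] / m_L[2], m_L[1] / m_L[2])
uR, vR = apply_h(H_R, m_R[0] / m_R[2], m_R[1] / m_R[2])
print(abs(vL - vR) < 1e-6)  # True: the rectified points lie on the same row
```

The check works because both rectified cameras share $A_{new}$ and $R_{new}$ and differ only by a shift of $C_R$ along the new $x$ axis.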

GETTING BACK TO 3D COORDINATES

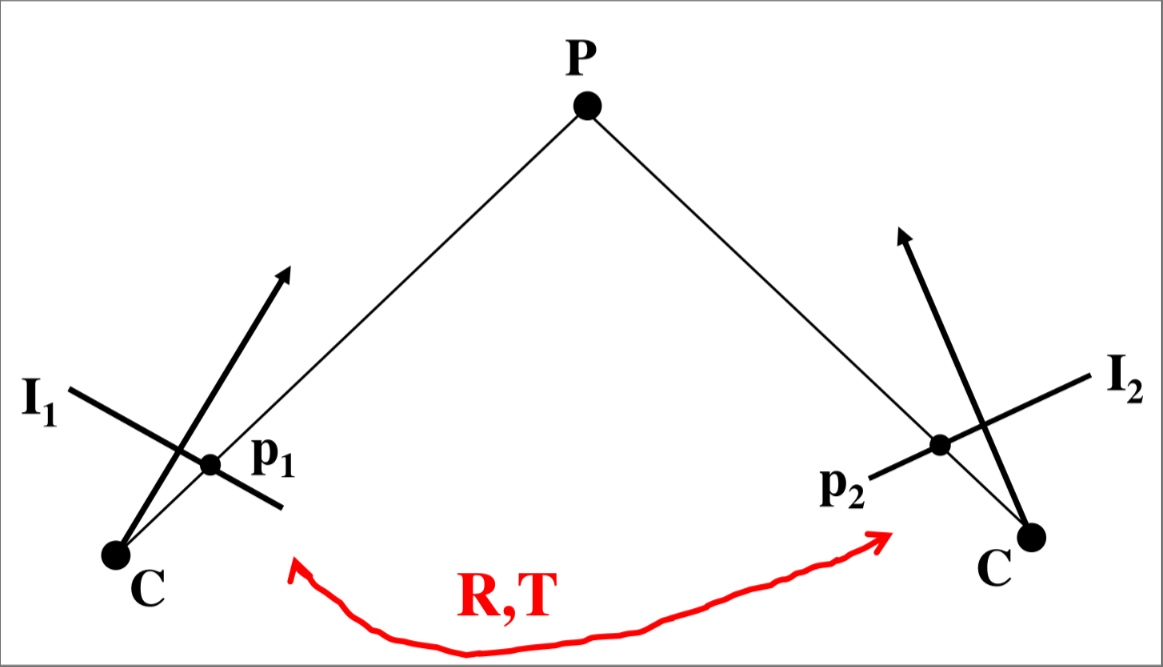

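With a calibrated and rectified stereo system, depth and the full 3D coordinates of a point can be estimated. Let $M = (x, y, z)$ be a point in the (rectified) left camera reference frame, $f$ the focal length (square pixels assumed), $(u_0, v_0)$ the principal point of $A_{new}$ and $b = \lVert T \rVert$ the baseline length. The relations between the 3D point and its image points are:

$$u_L = f\,\frac{x}{z} + u_0, \qquad u_R = f\,\frac{x - b}{z} + u_0, \qquad v_L = v_R = v = f\,\frac{y}{z} + v_0$$

so the disparity is $d = u_L - u_R = \dfrac{b f}{z}$.

The coordinates can be retrieved by the following expression:

$$z = \frac{b f}{d}, \qquad x = \frac{b\,(u_L - u_0)}{d}, \qquad y = \frac{b\,(v - v_0)}{d}$$

Multiplied by $d/b$, and written in homogeneous coordinates, this becomes:

$$\frac{d}{b}\begin{bmatrix} x \\ y \\ z \\ 1 \end{bmatrix} = \begin{bmatrix} u_L - u_0 \\ v - v_0 \\ f \\ d/b \end{bmatrix}$$

But $p = [u_L,\; v,\; d,\; 1]^{T}$ is the vector of the image coordinates (augmented with the disparity), and the right-hand side is linear in $p$ through the matrix:

$$Q = \begin{bmatrix} 1 & 0 & 0 & -u_0 \\ 0 & 1 & 0 & -v_0 \\ 0 & 0 & 0 & f \\ 0 & 0 & 1/b & 0 \end{bmatrix}$$

The 3D coordinates can be computed as follows:

$$\begin{bmatrix} X & Y & Z & W \end{bmatrix}^{T} = Q\,p, \qquad (x, y, z) = \left(\frac{X}{W},\; \frac{Y}{W},\; \frac{Z}{W}\right)$$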
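A minimal Python sketch of the reprojection through $Q$, assuming rectified images, square pixels and a positive disparity; `reprojection_matrix` and `triangulate` are hypothetical names, and the numbers are the made-up rig used for illustration ($f = 800$ px, principal point $(320, 240)$, $b = 0.1$).

```python
def matvec(A, x):
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def reprojection_matrix(f, u0, v0, b):
    """Q maps p = [u_L, v, d, 1] to homogeneous 3D coordinates [X, Y, Z, W]."""
    return [[1.0, 0.0, 0.0, -u0],
            [0.0, 1.0, 0.0, -v0],
            [0.0, 0.0, 0.0, f],
            [0.0, 0.0, 1.0 / b, 0.0]]


def triangulate(u_L, v, d, f, u0, v0, b):
    """3D point (x, y, z) in the rectified left frame from pixel (u_L, v) and disparity d."""
    if d <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    X, Y, Z, W = matvec(reprojection_matrix(f, u0, v0, b), [u_L, v, d, 1.0])
    return X / W, Y / W, Z / W


# Pixel (360, 260) in the left image with disparity 40
print(triangulate(360.0, 260.0, 40.0, 800.0, 320.0, 240.0, 0.1))  # (0.1, 0.05, 2.0)
```

Since $z = bf/d$, depth resolution degrades as the disparity shrinks: distant points are reconstructed less precisely than close ones.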

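Once the 3D coordinates of a point are known, it is also possible to compute where the same point appears in the image taken by another camera: the point is first moved into that camera's reference frame by a rotation $R'$ and a translation $T'$, and then projected with that camera's intrinsic matrix $A'$:

$$\tilde m' \simeq A' \left( R' M + T' \right)$$

This works between different cameras as well, as long as their intrinsic parameters and their rigid motion with respect to the SRF are known.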
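The transfer can be sketched in Python as below, where $A'$, $R'$, $T'$ become `A2`, `R2`, `T2`. This is a sketch under assumptions: `(R2, T2)` is assumed to map points from the rectified left frame (where the point was triangulated) into the other camera's frame, square pixels are assumed, and `triangulate` and `project_into` are hypothetical helpers.

```python
def matvec(A, x):
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def triangulate(u_L, v, d, f, u0, v0, b):
    """Closed-form reconstruction from a rectified pair: (x, y, z) in the left frame."""
    return [b * (u_L - u0) / d, b * (v - v0) / d, b * f / d]


def project_into(A2, R2, T2, M):
    """Pixel where the SRF point M appears in a camera with intrinsics A2 and motion (R2, T2)."""
    X = [p + t for p, t in zip(matvec(R2, M), T2)]  # R2 M + T2: into the other camera's frame
    m = matvec(A2, X)                               # A2 (R2 M + T2), homogeneous pixel
    return m[0] / m[2], m[1] / m[2]


# Sanity check: using the rectified right camera itself as the "other" camera
A = [[800.0, 0.0, 320.0], [0.0, 800.0, 240.0], [0.0, 0.0, 1.0]]
I3 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
M = triangulate(360.0, 260.0, 40.0, 800.0, 320.0, 240.0, 0.1)
print(project_into(A, I3, [-0.1, 0.0, 0.0], M))  # (320.0, 260.0), i.e. (u_L - d, v)
```

Any other calibrated camera (an RGB camera next to the stereo pair, for instance) only changes `A2`, `R2` and `T2`.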